We’re excited to announce that looping for Tasks in Databricks Workflows with For Each is now Generally Available! This new task type makes it easier than ever to automate repetitive tasks by looping over a dynamic set of parameters defined at runtime, and it is part of our continued investment in enhanced control flow features in Databricks Workflows. With For Each, you can streamline workflow efficiency and scalability, freeing up time to focus on insights rather than complex logic.

Looping dramatically improves the handling of repetitive tasks

Managing complex workflows often involves handling repetitive tasks that require processing multiple datasets or performing multiple operations. Data orchestration tools without support for looping present several challenges.

Simplifying complex logic

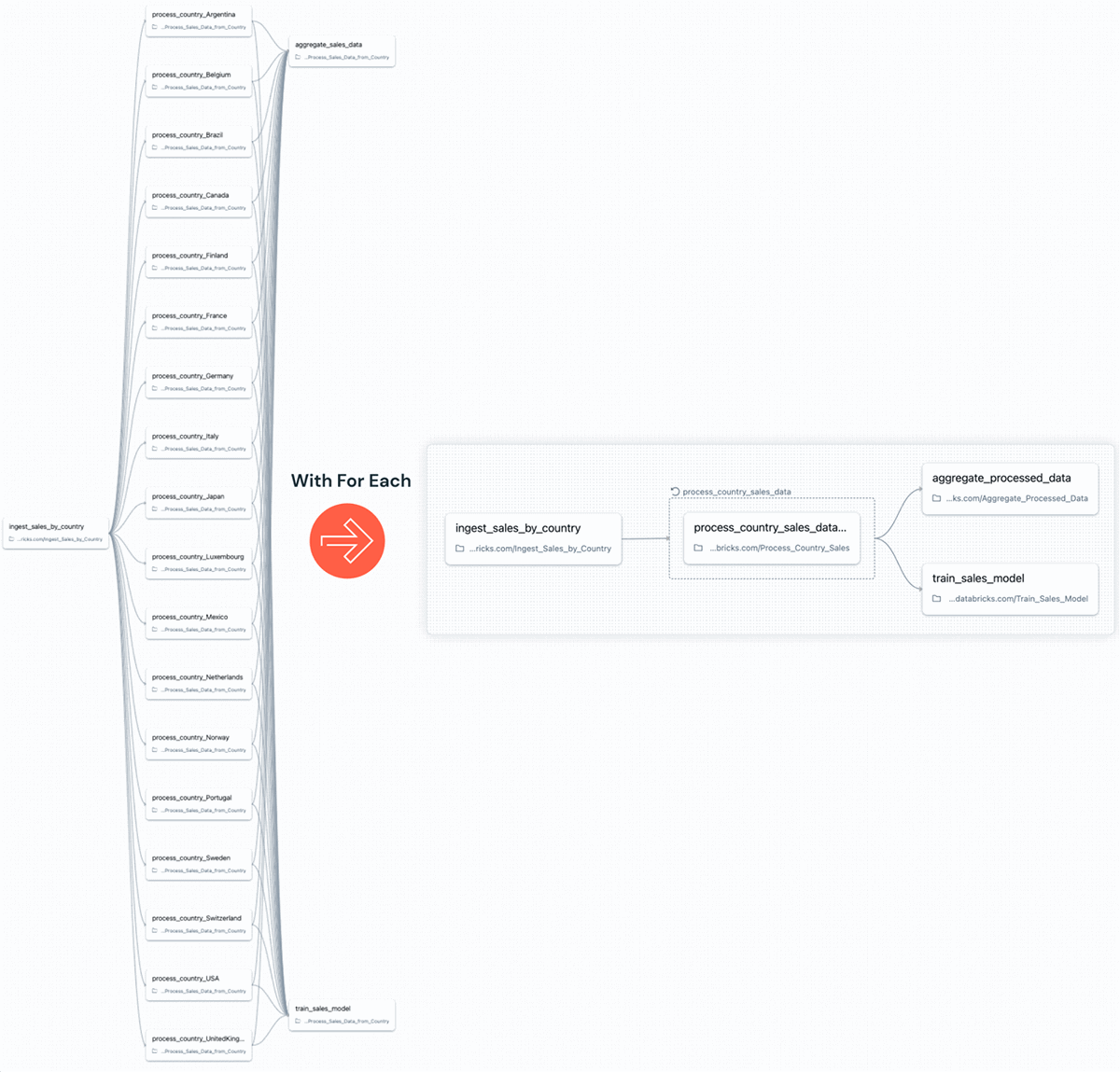

Previously, users often resorted to manual, hard-to-maintain logic to manage repetitive tasks (see above). This workaround often involves creating a single task for each operation, which bloats a workflow and is error-prone.

With For Each, the complicated logic required previously is greatly simplified. Users can easily define loops within their workflows without resorting to complex scripts, which saves authoring time. This not only streamlines the process of setting up workflows but also reduces the potential for errors, making workflows more maintainable and efficient. In the following example, sales data from 100 different countries is processed before aggregation with the following steps:

- Ingesting sales data,

- Processing data from all 100 countries using For Each, and

- Aggregating the data and training a sales model.
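The three steps above could be expressed as a job definition along the following lines. This is a minimal sketch, not the job from the post: it assumes the `for_each_task` shape of the Databricks Jobs API (`inputs`, a nested `task`, and the `{{input}}` reference to the current element), and the job name, task keys, notebook paths, and the three-country list are hypothetical placeholders.

```python
import json

# Hypothetical inputs: the post's example loops over 100 countries.
countries = ["US", "DE", "JP"]


def notebook(path, params=None):
    """Build a notebook_task body. All paths used below are placeholders."""
    body = {"notebook_path": path}
    if params:
        body["base_parameters"] = params
    return {"notebook_task": body}


job_spec = {
    "name": "sales_pipeline",  # hypothetical job name
    "tasks": [
        # Step 1: ingest the sales data.
        {"task_key": "ingest_sales", **notebook("/Workspace/demo/ingest_sales")},
        # Step 2: loop over the countries with For Each.
        {
            "task_key": "process_countries",
            "depends_on": [{"task_key": "ingest_sales"}],
            "for_each_task": {
                # The loop inputs are passed as a JSON-encoded array.
                "inputs": json.dumps(countries),
                # The nested task runs once per element; {{input}} stands
                # for the element of the current iteration.
                "task": {
                    "task_key": "process_country",
                    **notebook(
                        "/Workspace/demo/process_country",
                        {"country": "{{input}}"},
                    ),
                },
            },
        },
        # Step 3: aggregate the results and train the sales model.
        {
            "task_key": "aggregate_and_train",
            "depends_on": [{"task_key": "process_countries"}],
            **notebook("/Workspace/demo/aggregate_and_train"),
        },
    ],
}

print(json.dumps(job_spec, indent=2))
```

The point of the sketch is that the loop is a single task in the graph: adding a country changes the `inputs` array, not the number of tasks.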

Enhanced flexibility with dynamic parameters

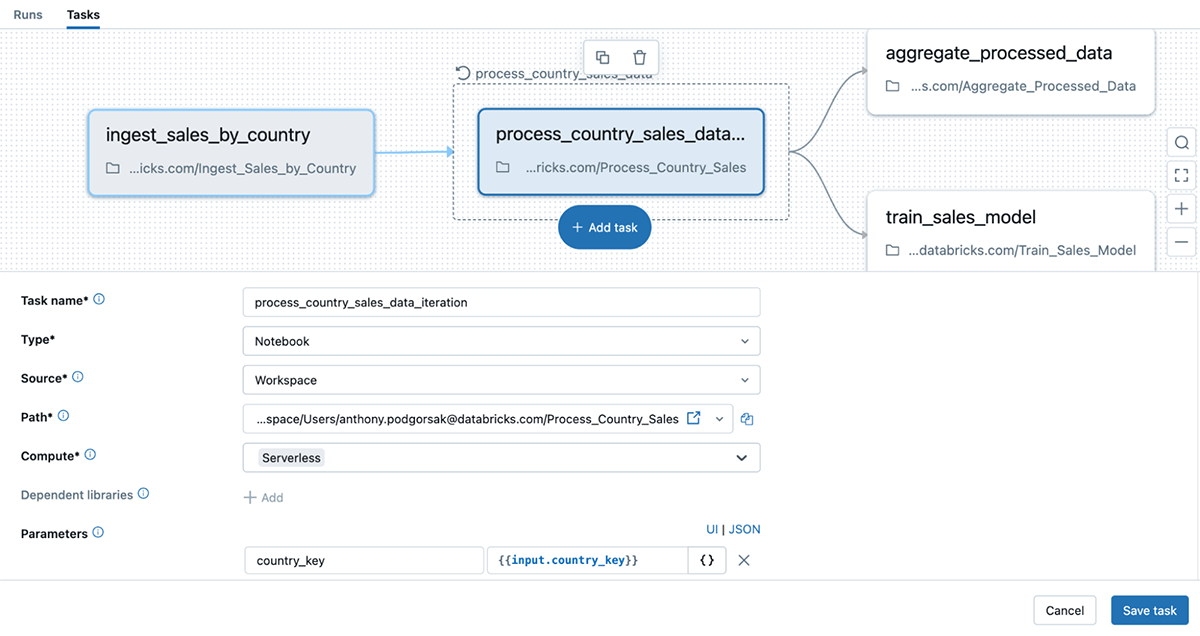

Without For Each, users are limited to scenarios where parameters don’t change frequently. With For Each, the flexibility of Databricks Workflows is significantly enhanced through the ability to loop over fully dynamic parameters defined at runtime with task values, reducing the need for hard coding. In the accompanying screenshot, the parameters of the notebook task are dynamically defined and passed into the For Each loop (you may also notice it’s using serverless compute, now Generally Available!).
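As a rough sketch of how runtime-defined parameters can reach the loop: an upstream task publishes a list as a task value, and the For Each `inputs` field refers to it with a dynamic value reference. The helper `task_value_ref`, the task key `list_countries`, the value key `countries`, and the notebook path are hypothetical; the `dbutils` call is shown as a comment because it only runs inside a Databricks notebook.

```python
def task_value_ref(task_key: str, value_key: str) -> str:
    """Build a dynamic value reference to a task value set by an upstream task."""
    return "{{tasks.%s.values.%s}}" % (task_key, value_key)


# In the upstream notebook task (hypothetical key and values):
# dbutils.jobs.taskValues.set(key="countries", value=["US", "DE", "JP"])

for_each_task = {
    # Resolved at runtime to the list published by the upstream task,
    # so nothing about the countries is hard-coded in the job itself.
    "inputs": task_value_ref("list_countries", "countries"),
    "task": {
        "task_key": "process_country",
        "notebook_task": {
            "notebook_path": "/Workspace/demo/process_country",  # placeholder
            "base_parameters": {"country": "{{input}}"},
        },
    },
}

print(for_each_task["inputs"])  # {{tasks.list_countries.values.countries}}
```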

Efficient processing with concurrency

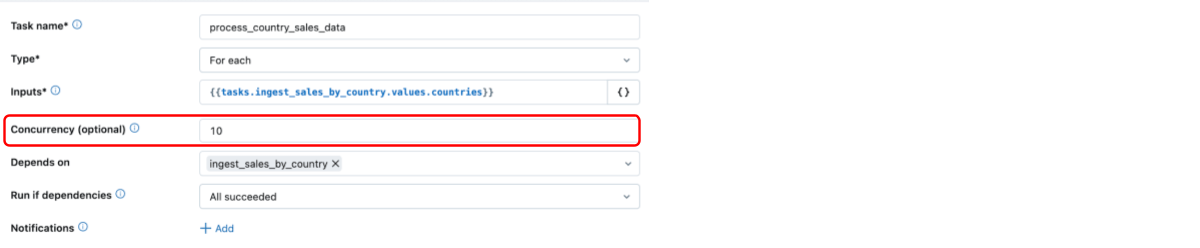

For Each supports truly concurrent computation, setting it apart from other major orchestration tools. With For Each, users can specify how many tasks to run in parallel, improving efficiency by reducing end-to-end execution time. In the accompanying screenshot, the concurrency of the For Each loop is set to 10, with support for up to 100 concurrent loops. By default, the concurrency is set to 1 and the tasks run sequentially.
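The concurrency setting can be pictured with a small local analogy. The sketch below is plain Python, not how Databricks schedules iterations: it uses a thread pool to cap the number of iterations in flight, with the default of 1 and the limit of 100 taken from the figures quoted above. The names `run_for_each` and `process_country` are made up for illustration.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_CONCURRENCY = 100  # upper bound quoted in the post


def run_for_each(inputs, task, concurrency=1):
    """Run task(item) for every input with at most `concurrency` in flight.

    The default of 1 processes the inputs one after another.
    """
    if not 1 <= concurrency <= MAX_CONCURRENCY:
        raise ValueError("concurrency must be between 1 and 100")
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        # pool.map returns results in input order.
        return list(pool.map(task, inputs))


lock = threading.Lock()
in_flight = 0
peak = 0


def process_country(country_id):
    """Stand-in for one loop iteration; tracks how many run at once."""
    global in_flight, peak
    with lock:
        in_flight += 1
        peak = max(peak, in_flight)
    time.sleep(0.01)  # simulated work
    with lock:
        in_flight -= 1
    return country_id * 2


results = run_for_each(range(100), process_country, concurrency=10)
print(len(results), "iterations, peak parallelism:", peak)
```

With 100 inputs and a concurrency of 10, no more than 10 iterations overlap at any moment, which is what shortens end-to-end time compared with a sequential run.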

Debug with ease

Debugging and monitoring workflows becomes more difficult without looping support. Workflows with a large number of tasks can be difficult to debug, reducing uptime.

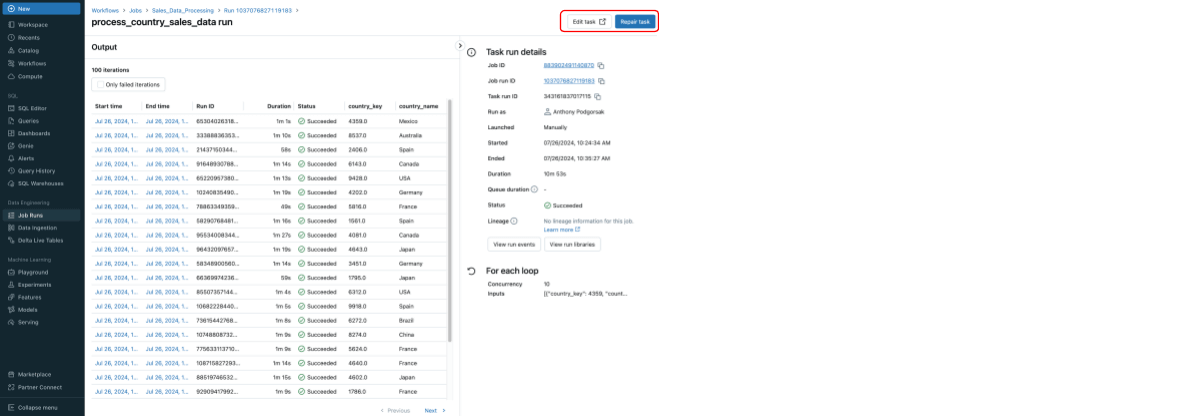

Support for repairs within For Each makes debugging and monitoring much smoother. If one or more iterations fail, only the failed iterations will be re-run, not the entire loop. This saves both compute costs and time, making it easier to maintain efficient workflows. Enhanced visibility into the workflow’s execution enables quicker troubleshooting and reduces downtime, ultimately improving productivity and ensuring timely insights. The accompanying screenshot shows the final output of the example above.

These enhancements further expand the wide set of capabilities Databricks Workflows offers for orchestration on the Data Intelligence Platform, dramatically improving the user experience and making customers’ workflows more efficient, flexible, and manageable.
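The repair behavior described here, re-running only the iterations that failed, can be illustrated with a small conceptual model. This is a sketch in plain Python under made-up names (`run_iterations`, `repair`, `process_country`), not the Databricks repair mechanism itself.

```python
def run_iterations(inputs, task):
    """Run task on every input and record a per-iteration status."""
    status = {}
    for item in inputs:
        try:
            status[item] = ("SUCCESS", task(item))
        except Exception as exc:
            status[item] = ("FAILED", exc)
    return status


def repair(status, task):
    """Re-run only the failed iterations and return the inputs that were re-run."""
    failed = [item for item, (state, _) in status.items() if state == "FAILED"]
    status.update(run_iterations(failed, task))
    return failed


calls = []        # every invocation, to show what was actually executed
broken = {"DE"}   # inputs that fail until the root cause is fixed


def process_country(country):
    calls.append(country)
    if country in broken:
        raise RuntimeError("bad data for " + country)
    return country + ": ok"


status = run_iterations(["US", "DE", "JP"], process_country)
broken.clear()  # simulate fixing the underlying problem
rerun = repair(status, process_country)

print(rerun)  # ['DE']
print(calls)  # ['US', 'DE', 'JP', 'DE']
```

The successful iterations for `US` and `JP` are executed once; only `DE` is executed a second time, which is where the compute and time savings come from.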

Get started

We’re very excited to see how you use For Each to streamline your workflows and supercharge your data operations!

To learn more about the different task types and how to configure them in the Databricks Workflows UI, please refer to the product docs.