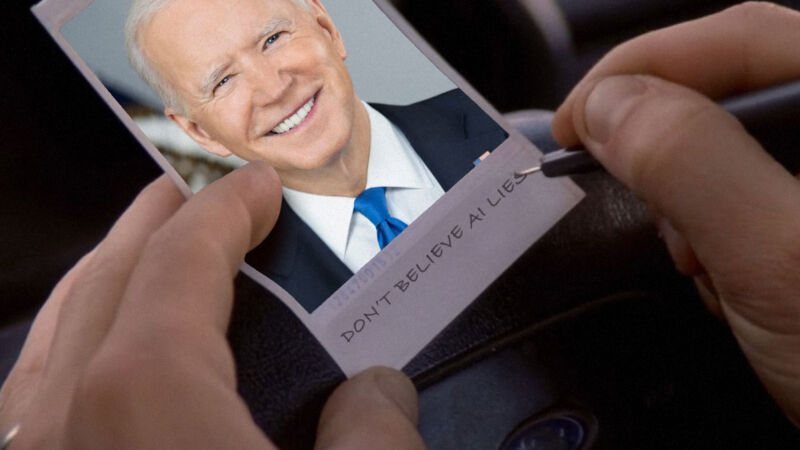

Credit: Memento | Aurich Lawson

Given the flood of photorealistic AI-generated images washing over social media networks like X and Facebook these days, we’re seemingly entering a new age of media skepticism: the era of what I’m calling “deep doubt.” While questioning the authenticity of digital content stretches back decades (and analog media long before that), easy access to tools that generate convincing fake content has led to a new wave of liars using AI-generated scenes to deny real documentary evidence. Along the way, people’s existing skepticism toward online content from strangers may be reaching new heights.

Deep doubt is skepticism of real media that stems from the existence of generative AI. This manifests as broad public skepticism toward the veracity of media artifacts, which in turn leads to a notable consequence: People can now more credibly claim that real events did not happen and suggest that documentary evidence was fabricated using AI tools.

The concept behind “deep doubt” isn’t new, but its real-world impact is becoming increasingly apparent. Since the term “deepfake” first surfaced in 2017, we’ve seen a rapid evolution in AI-generated media capabilities. This has led to recent examples of deep doubt in action, such as conspiracy theorists claiming that President Joe Biden has been replaced by an AI-powered hologram and former President Donald Trump’s baseless accusation in August that Vice President Kamala Harris used AI to fake crowd sizes at her rallies. And on Friday, Trump cried “AI” again at a photo of him with E. Jean Carroll, a writer who successfully sued him for sexual assault, that contradicts his claim of never having met her.