We recently announced the General Availability of our serverless compute options for Notebooks, Jobs, and Pipelines. Serverless compute provides rapid workload startup, automatic infrastructure scaling, and seamless version upgrades of the Databricks runtime. We're committed to continuing to innovate with our serverless offering and to continuously improving price/performance for your workloads. Today we're excited to make a few announcements that will help improve your serverless cost experience:

- Efficiency improvements that result in a greater than 25% reduction in costs for most customers, especially those with short-duration workloads.

- Enhanced cost observability that helps track and monitor spending at the individual Notebook, Job, and Pipeline level.

- Simple controls (available in the future) for Jobs and Pipelines that will allow you to indicate a preference to optimize workload execution for cost over performance.

- Continued availability of the 50% introductory discount on our new serverless compute options for Jobs and Pipelines, and 30% for Notebooks.

Efficiency Improvements

Based on insights gained from running customer workloads, we've implemented efficiency improvements that will enable most customers to achieve a 25% or greater reduction in their serverless compute spend. These improvements primarily reduce the cost of short workloads. The changes will be rolled out automatically over the coming weeks, so your Notebooks, Jobs, and Pipelines will benefit from them without any action on your part.

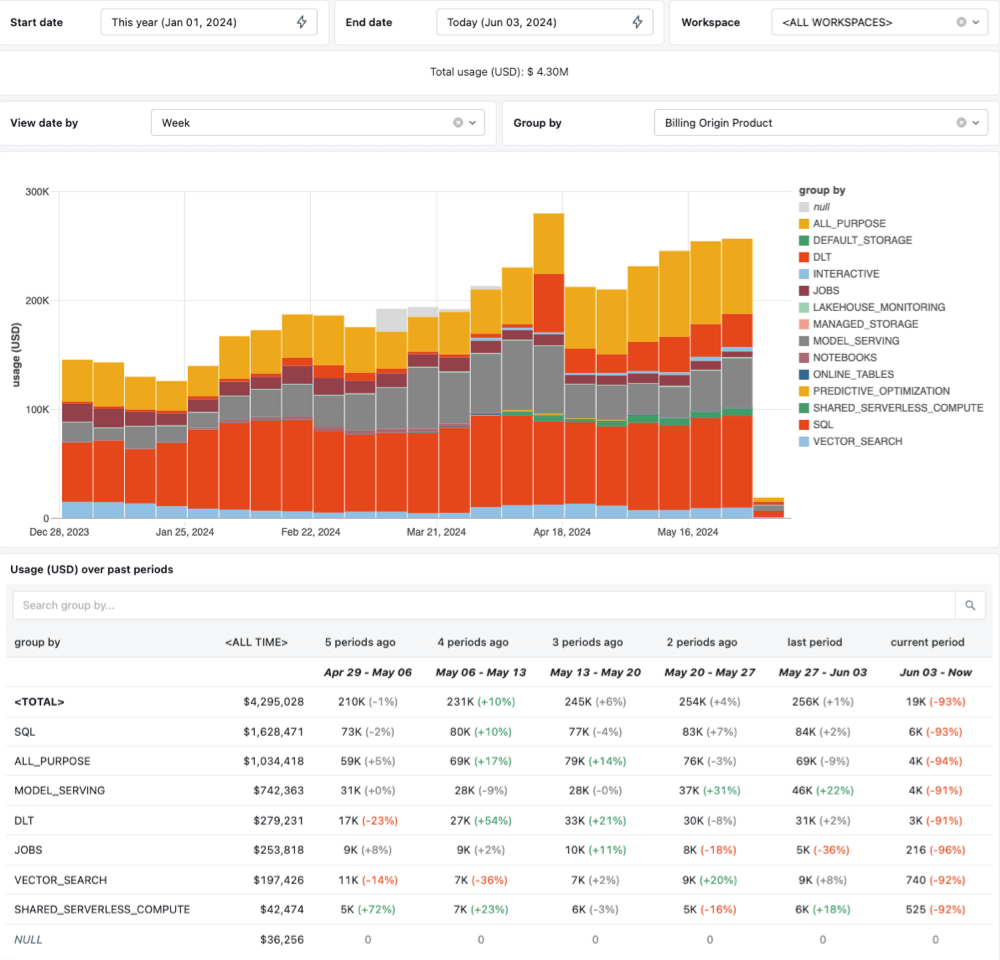

Enhanced cost observability

To make cost management more transparent, we've improved our cost-tracking capabilities. All compute costs associated with serverless will now be fully trackable down to the individual Notebook, Job, or Pipeline run. This means you will no longer see shared serverless compute costs that are unattributed to any particular workload. This granular attribution provides visibility into the full cost of each workload, making it easier to monitor and govern expenses. In addition, we've added new fields to the billable usage system table, including Job name, Notebook path, and user identity for Pipelines, to simplify cost reporting. We've also created a dashboard template that makes it easy to visualize cost trends in your workspaces. You can learn more and download the template here.
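As a rough illustration of the kind of per-workload reporting this attribution enables, the sketch below sums usage by workload in plain Python. The records and the field names (`workload_type`, `workload_name`, `usage_quantity`) are hypothetical stand-ins invented for this example; they are not the actual schema of the billable usage system table.

```python
from collections import defaultdict

# Hypothetical usage records. The field names are illustrative assumptions,
# not the real columns of the billable usage system table.
usage_records = [
    {"workload_type": "JOB", "workload_name": "nightly_etl", "usage_quantity": 12.5},
    {"workload_type": "NOTEBOOK", "workload_name": "/Users/a@example.com/explore", "usage_quantity": 3.0},
    {"workload_type": "JOB", "workload_name": "nightly_etl", "usage_quantity": 7.5},
    {"workload_type": "PIPELINE", "workload_name": "orders_pipeline", "usage_quantity": 20.0},
]


def usage_by_workload(records):
    """Sum usage per (workload type, workload name) pair."""
    totals = defaultdict(float)
    for record in records:
        key = (record["workload_type"], record["workload_name"])
        totals[key] += record["usage_quantity"]
    return dict(totals)


totals = usage_by_workload(usage_records)

# Print workloads from highest to lowest usage.
for (workload_type, name), quantity in sorted(totals.items(), key=lambda kv: -kv[1]):
    print(f"{workload_type:<9} {name:<32} {quantity:6.1f}")
```

Because every record carries a workload identifier, no usage ends up in an unattributed bucket, which is the point of the granular attribution described above.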

Future controls that allow you to indicate a preference for cost optimization

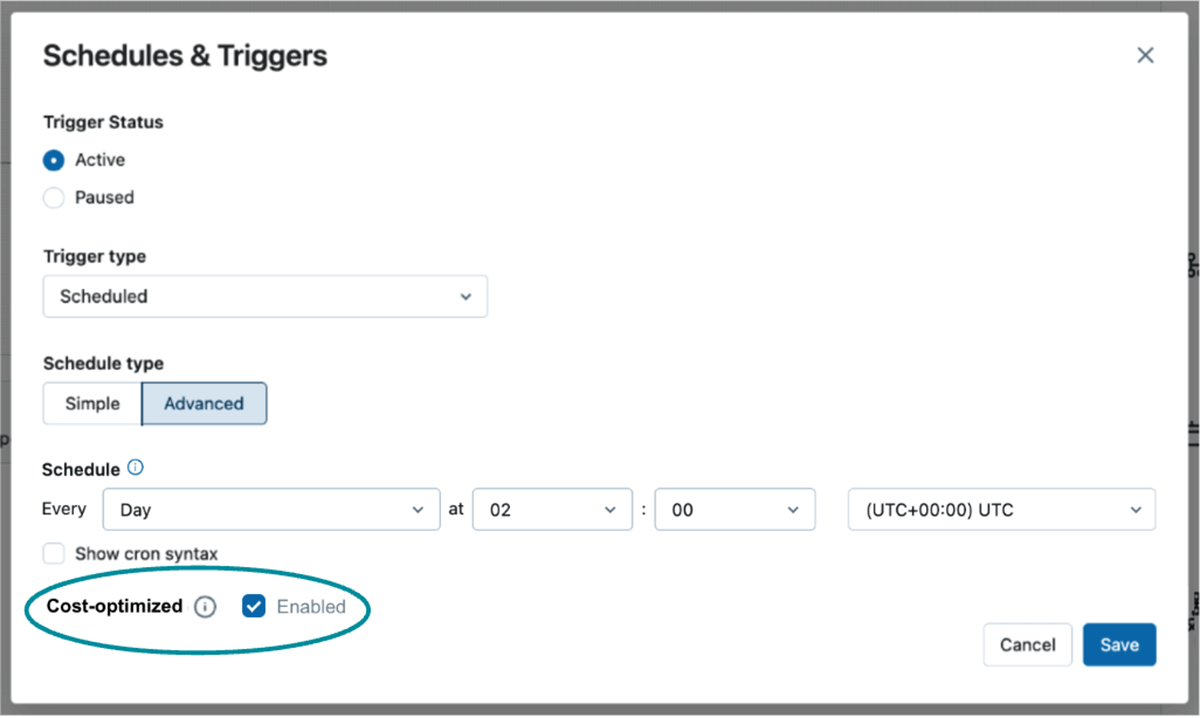

For each of your data platform workloads, you need to determine the right balance between performance and cost. With serverless compute, we're committed to simplifying how you meet your specific workloads' price/performance goals. Currently, our serverless offering focuses on performance: we optimize infrastructure and manage our compute fleet so that your workloads experience fast startup and short runtimes. This is great for workloads with low-latency needs and for cases where you do not want to manage or pay for instance pools.

However, we have also heard your feedback regarding the need for cheaper options for certain Jobs and Pipelines. For some workloads, you are willing to sacrifice some startup time or execution speed for lower costs. In response, we're thrilled to introduce a set of simple, straightforward controls that allow you to prioritize cost savings over performance. This new flexibility will let you customize your compute strategy to better meet the specific price and performance requirements of your workloads. Stay tuned for more updates on this exciting development in the coming months, and sign up for the preview waitlist here.

Unlock 50% Savings on Serverless Compute: Limited-Time Introductory Offer!

Take advantage of our introductory discounts: get 50% off serverless compute for Jobs and Pipelines and 30% off for Notebooks, available until October 31, 2024. This limited-time offer is the perfect opportunity to explore serverless compute at a reduced cost, so don't miss out!
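To see what these rates mean in practice, here is a minimal back-of-the-envelope sketch. The discount rates come from the offer above; the monthly spend figures are made-up numbers, and the sketch assumes the discount applies as a simple percentage off the undiscounted cost.

```python
# Introductory discount rates stated in the offer above.
INTRO_DISCOUNT = {"jobs": 0.50, "pipelines": 0.50, "notebooks": 0.30}


def discounted_cost(undiscounted_cost, workload_type):
    """Return the cost after applying the introductory discount."""
    rate = INTRO_DISCOUNT[workload_type]
    return undiscounted_cost * (1 - rate)


# Hypothetical monthly spend before the discount (illustrative numbers only).
monthly_spend = {"jobs": 1000.0, "pipelines": 400.0, "notebooks": 200.0}

total_before = sum(monthly_spend.values())
total_after = sum(discounted_cost(cost, kind) for kind, cost in monthly_spend.items())

print(f"Before discount: {total_before:.2f}")
print(f"After discount:  {total_after:.2f}")
```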

Start using serverless compute today:

- Enable serverless compute in your account on AWS or Azure

- Make sure your workspace is enabled for Unity Catalog and is in a supported region in AWS or Azure

- For existing PySpark workloads, ensure they are compatible with shared access mode and DBR 14.3+

- Follow the specific instructions for connecting your Notebooks, Jobs, and Pipelines to serverless compute

- Leverage serverless compute from any third-party system using Databricks Connect. Develop locally from your IDE, or seamlessly integrate your applications with Databricks in Python for a smooth, efficient workflow.