Recommender systems (RecSys) have become an integral part of modern digital experiences, powering personalized content suggestions across various platforms. These sophisticated systems and algorithms analyze user behavior, preferences, and item characteristics to predict and recommend items of interest. In the era of big data and machine learning, recommender systems have evolved from simple collaborative filtering approaches to complex models that leverage deep learning techniques.

It can be challenging to scale these recommender systems, especially when dealing with millions of users or thousands of products. Doing so requires finding a balance between cost, efficiency, and accuracy. A common approach to this scalability issue is a two-stage process: an initial, efficient “broad search” followed by a more computationally intensive “narrow search” on the most relevant items. For example, in movie recommendations, an efficient model might first narrow the search space from thousands to about 100 items per user, and a more complex model then precisely orders the top 10 recommendations. This strategy optimizes resource utilization while maintaining recommendation quality, addressing scalability challenges in large-scale recommendation systems.
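The two-stage process can be sketched in a few lines of plain Python. This is a minimal illustration, not the reference solution: the function `two_stage_recommend` and the toy scorers `cheap` and `precise` are hypothetical names introduced here, and real systems would replace them with a retrieval model and a ranking model.

```python
import heapq
from typing import Callable, List


def two_stage_recommend(
    user: int,
    catalog: List[int],
    cheap_score: Callable[[int, int], float],
    precise_score: Callable[[int, int], float],
    n_candidates: int = 100,
    k: int = 10,
) -> List[int]:
    """Broad search with a cheap scorer, then narrow search with a costly one."""
    # Stage 1 ("broad search"): keep only the best n_candidates by the cheap score.
    candidates = heapq.nlargest(
        n_candidates, catalog, key=lambda item: cheap_score(user, item)
    )
    # Stage 2 ("narrow search"): re-rank the small candidate set precisely.
    ranked = sorted(
        candidates, key=lambda item: precise_score(user, item), reverse=True
    )
    return ranked[:k]


# Toy scorers (hypothetical): the cheap one is a coarse proxy of the precise one.
def cheap(user: int, item: int) -> float:
    return -(abs(item - user) // 10)  # closeness in buckets of 10


def precise(user: int, item: int) -> float:
    return -abs(item - user)  # exact closeness


top = two_stage_recommend(
    user=500, catalog=list(range(5000)), cheap_score=cheap, precise_score=precise
)
```

The expensive scorer runs on only 100 items instead of 5,000, which is where the cost saving comes from.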

Many companies don’t have the resources to build and scale recommender systems of this size. Databricks offers all the essential components (data processing, feature engineering, model training, monitoring, governance, and serving) that can be combined to create a state-of-the-art recommender system, as well as the technical support resources to help implement them. This article is the first in a series designed to demonstrate effective techniques for training and deploying recommendation models at scale on Databricks. In this installment, we focus on distributed data loading and training. Subsequent articles will explore distributed checkpointing, inference, and the integration of complementary components, such as vector stores, to create a robust, end-to-end recommender system pipeline.

This article presents a series of reference solutions that serve as a robust foundation for training enterprise-scale recommender systems on the Databricks Data Intelligence Platform. These solutions use Mosaic Streaming as the dataloader and TorchDistributor as the orchestrator for distributed training, both of which were developed in-house at Databricks. Using TorchRec, a highly scalable recommender system package built on PyTorch, we showcase implementations of two advanced deep learning models that align with the two-stage approach mentioned earlier: the Two Tower model, ideal for the efficient “broad search” phase, and Meta’s DLRM (Deep Learning Recommendation Model), suited for the more intensive “narrow search” phase. Both models can handle millions of users and items efficiently, with the Two Tower model quickly narrowing the candidate set from potentially millions to thousands, and DLRM providing precise ordering of the most relevant items. To facilitate seamless integration into your workspaces and projects, we’ve made these models available through the Databricks Marketplace.

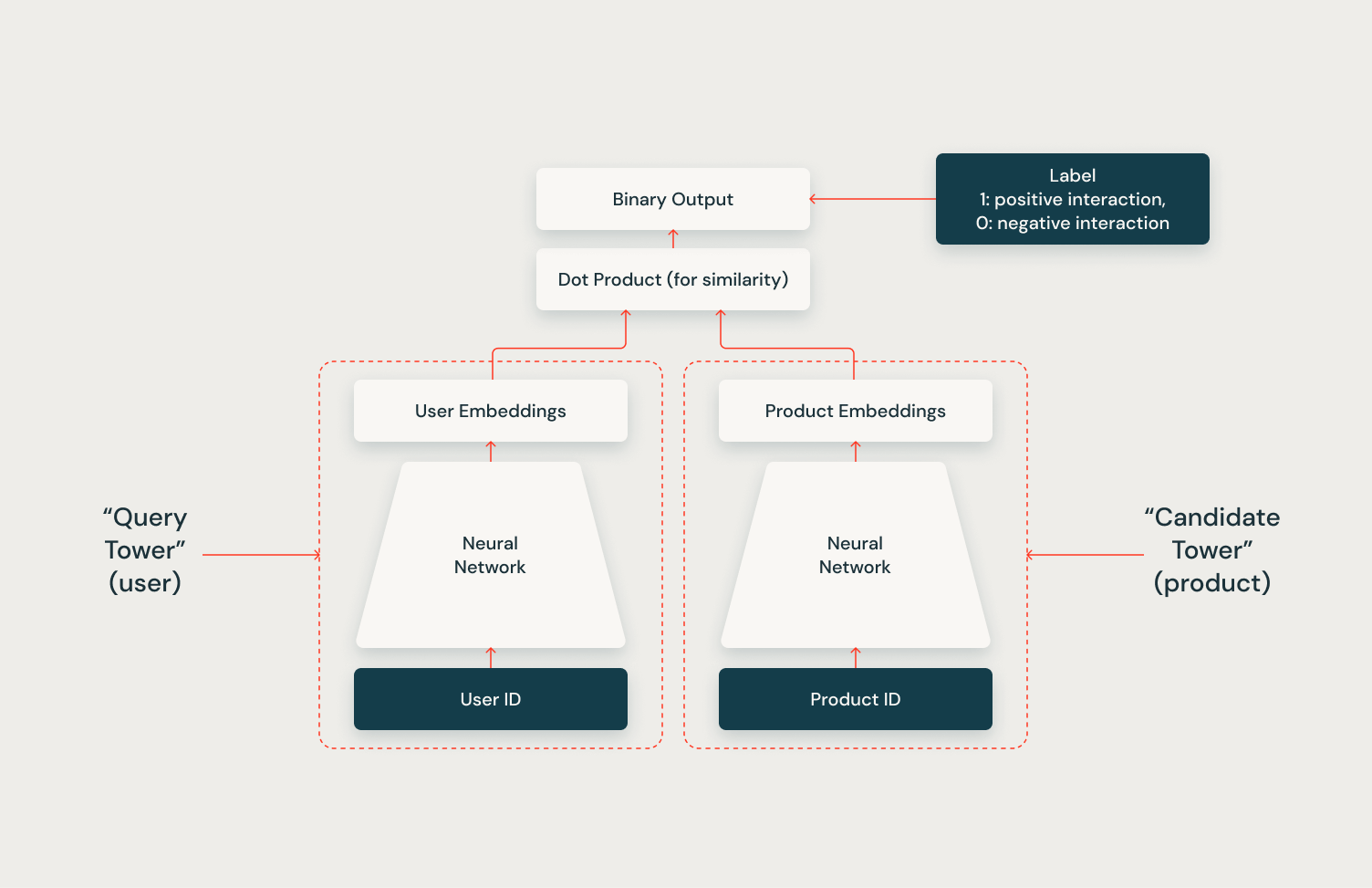

Two Tower

The Two Tower model is an efficient architecture for large-scale recommender systems. As illustrated in the diagram, it consists of two parallel neural networks: the “query tower” for users and the “candidate tower” for products. Each tower processes its input (User ID or Product ID) to generate dense embeddings, representing users and products in a shared space. The model predicts user-item interactions by computing the similarity between these embeddings using a dot product, enabling quick identification of potentially relevant items from a large catalog. This makes it ideal for the initial “broad search” phase in recommendation systems.

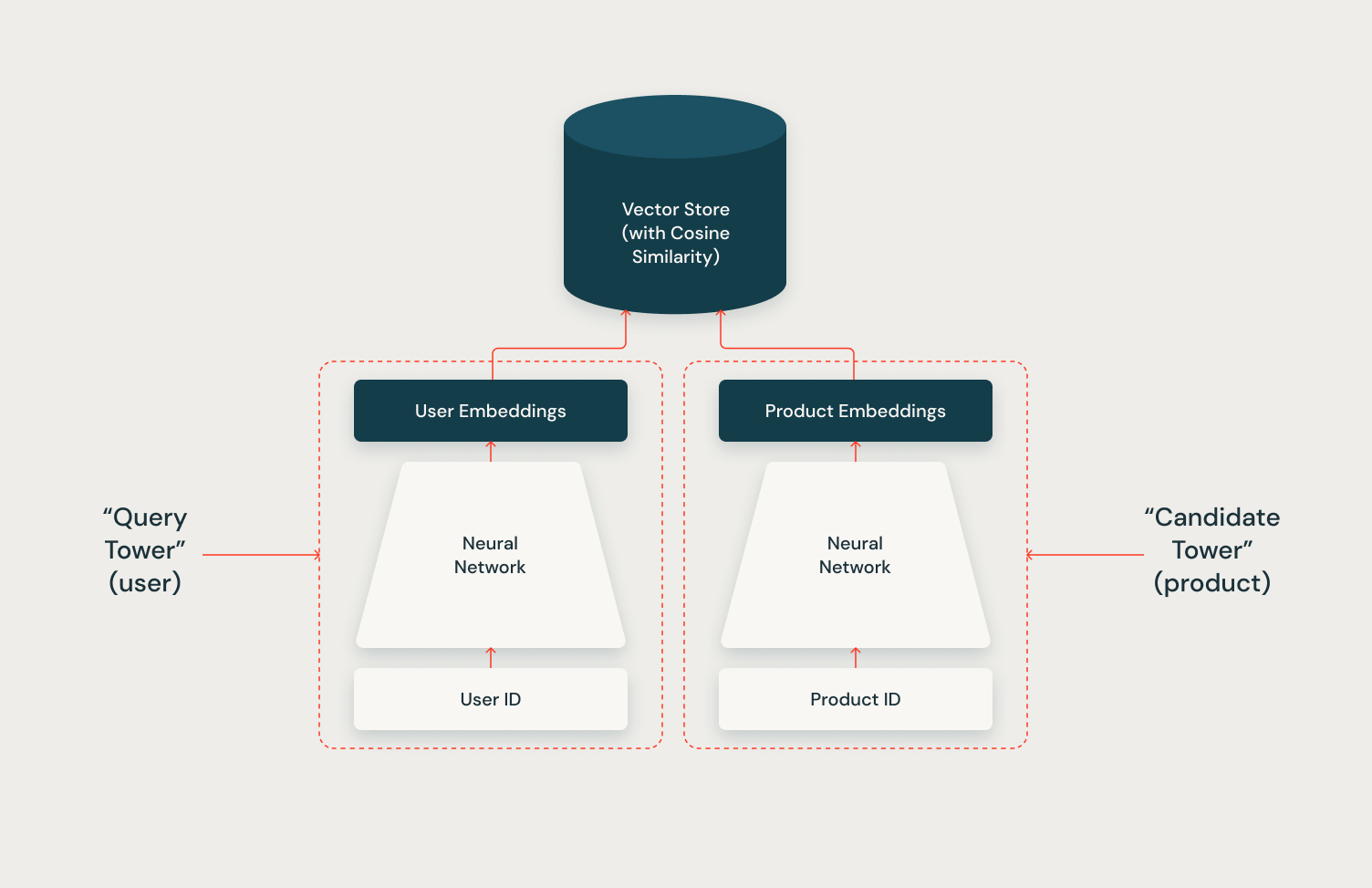
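The dot-product scoring described above can be illustrated with a small, self-contained sketch. It is a toy under stated assumptions: each “tower” is replaced by a table of random embeddings (a trained tower would be a neural network), and the names `make_tower`, `retrieve`, and `EMBEDDING_DIM` are hypothetical and not part of TorchRec or the reference notebooks.

```python
import random
from typing import Dict, List

EMBEDDING_DIM = 4  # toy value; real models use far larger embeddings


def make_tower(ids: List[int], seed: int) -> Dict[int, List[float]]:
    """Stand-in for a trained tower: maps each ID to a dense embedding."""
    rng = random.Random(seed)
    return {i: [rng.uniform(-1.0, 1.0) for _ in range(EMBEDDING_DIM)] for i in ids}


def dot(u: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def retrieve(
    user_id: int,
    query_tower: Dict[int, List[float]],
    candidate_tower: Dict[int, List[float]],
    k: int,
) -> List[int]:
    """Score every product against the user embedding and keep the top k."""
    user_vec = query_tower[user_id]
    scores = {pid: dot(user_vec, vec) for pid, vec in candidate_tower.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


query_tower = make_tower(list(range(3)), seed=0)        # 3 users
candidate_tower = make_tower(list(range(100)), seed=1)  # 100 products
top_products = retrieve(0, query_tower, candidate_tower, k=5)
```

Because the product embeddings do not depend on the user, they can be computed once and indexed, which is what makes this stage cheap at inference time.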

The Two Tower architecture’s full potential is realized through its integration with a vector store. By using a vector store to index candidate vectors, the system can efficiently and scalably retrieve hundreds of relevant candidates for each user during inference. In a future article in this series, we will demonstrate how to implement this integration using the Mosaic AI Vector Store and the Two Tower model, showcasing the power of this combined approach.

DLRM

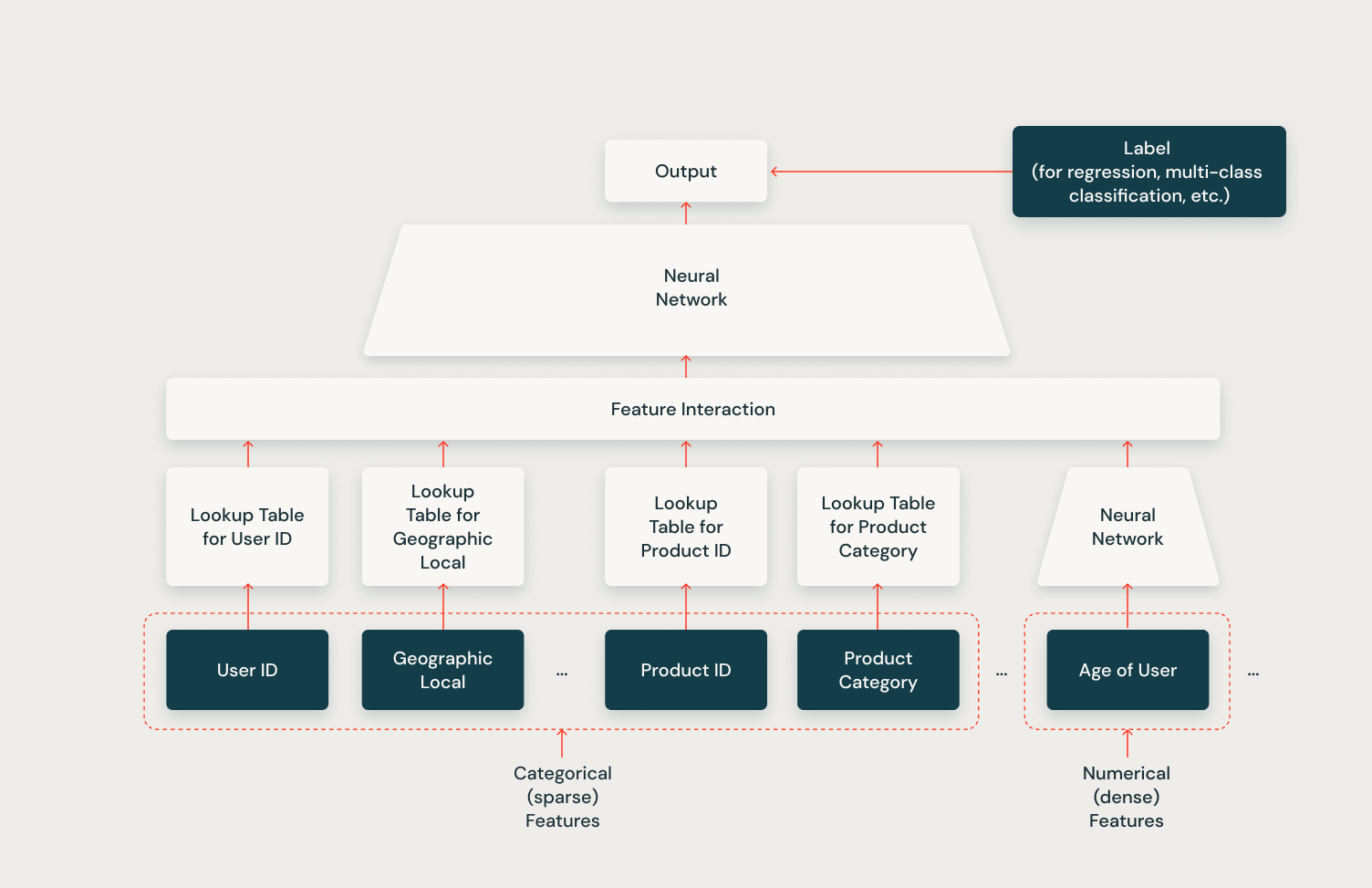

The Deep Learning Recommendation Model (DLRM) by Meta, as illustrated in the diagram, is a sophisticated architecture designed for large-scale recommendation systems. It efficiently handles both categorical (sparse) and numerical (dense) features, making it highly versatile for various recommendation tasks. The model uses lookup tables to embed categorical features, and these embeddings, along with the numerical features, are then processed through a feature interaction layer. This layer captures complex relationships between different feature types. The combined features are then fed into a neural network, which further processes the information to generate the final output. This output can be used for various tasks such as regression or multi-class classification, depending on the specific recommendation problem, but is most often used for predicting click-through rates. The DLRM’s ability to handle diverse feature types and capture intricate feature interactions makes it particularly effective in the “narrow search” phase for precise item ranking in recommendation systems.
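To make the data flow concrete, here is a minimal pure-Python sketch of a DLRM-style forward pass. It is an illustration under assumptions, not Meta’s or TorchRec’s implementation: weights are random rather than trained, each network is collapsed into a single linear layer, pairwise dot products stand in for the interaction layer, and the feature names and sizes are hypothetical.

```python
import math
import random
from itertools import combinations
from typing import Dict, List

DIM = 4      # embedding dimension (toy value)
N_DENSE = 3  # number of numerical features (toy value)
rng = random.Random(0)


def rand_vec(n: int) -> List[float]:
    return [rng.uniform(-0.5, 0.5) for _ in range(n)]


def dot(u: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


# One lookup table per categorical feature (hypothetical names and sizes).
tables: Dict[str, List[List[float]]] = {
    "user_id": [rand_vec(DIM) for _ in range(10)],
    "product_id": [rand_vec(DIM) for _ in range(20)],
}
bottom_w = [rand_vec(N_DENSE) for _ in range(DIM)]  # dense features -> DIM
n_vectors = len(tables) + 1                         # embeddings + dense vector
top_w = rand_vec(DIM + n_vectors * (n_vectors - 1) // 2)


def dlrm_forward(sparse: Dict[str, int], dense: List[float]) -> float:
    # 1. Embed each categorical feature via its lookup table.
    embedded = [tables[name][sparse[name]] for name in tables]
    # 2. Project numerical features to the embedding dimension (linear + ReLU).
    dense_vec = [max(0.0, dot(row, dense)) for row in bottom_w]
    # 3. Feature interaction: dot product of every pair of vectors.
    vectors = embedded + [dense_vec]
    interactions = [dot(a, b) for a, b in combinations(vectors, 2)]
    # 4. Final layer on the combined features, squashed to a probability.
    logit = dot(top_w, dense_vec + interactions)
    return 1.0 / (1.0 + math.exp(-logit))


p_click = dlrm_forward({"user_id": 3, "product_id": 7}, dense=[0.2, 1.5, -0.3])
```

The sigmoid output corresponds to the click-through-rate use case; a regression or multi-class head would change only step 4.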

For production-level DLRM model training, we recommend leveraging the Databricks Feature Store. This tool enables the seamless creation of training datasets with diverse feature arrangements for both users and items. While the current Databricks documentation provides examples for simpler recommender systems, a future article in this series will demonstrate how to integrate the Databricks Feature Store with the models discussed here.

How to Train a Recommendation Model

Both examples of training recommendation models share a similar overall structure, using state-of-the-art techniques for large-scale distributed training.

Data Preprocessing and Data Loading with Mosaic Streaming

The examples in these stages leverage Mosaic Streaming, a crucial tool for optimizing the training process on large datasets stored in cloud environments. This approach maximizes efficiency, cost-effectiveness, and scalability. When training large recommender systems, particularly those that need to accommodate millions of users and/or items, multi-node training is often necessary. However, distributed data loading introduces a range of challenges, including synchronization issues, memory management, and reproducibility across runs.

Mosaic Streaming is purpose-built to address these challenges. It is specifically designed to support multi-node, distributed training of large models, with a focus on ensuring correctness guarantees, optimizing performance, providing flexibility, and enhancing ease of use. By tackling these critical aspects, Mosaic Streaming enables seamless scaling of recommender systems while mitigating the common pitfalls associated with distributed training environments.

The preprocessing stage involves several steps:

- Gathering training data from a table in Unity Catalog

- Performing necessary data transformations

- Using Mosaic Streaming’s dataframe_to_mds API to materialize the processed data into a Unity Catalog Volume

```python
from streaming.base.converters import dataframe_to_mds

# `columns` (column name -> MDS encoding) and `compression` are assumed to be
# defined earlier in the notebook, along with the DataFrames and output paths.
def save_data(df, output_path, label, num_workers=40):
    print(f"Saving {label} data to: {output_path}")
    mds_kwargs = {'out': output_path, 'columns': columns, 'compression': compression}
    dataframe_to_mds(df.repartition(num_workers), merge_index=True, mds_kwargs=mds_kwargs)

save_data(train_df, output_dir_train, 'train')
save_data(validation_df, output_dir_validation, 'validation')
save_data(test_df, output_dir_test, 'test')
```

We then use the Mosaic AI StreamingDataset and StreamingDataLoader APIs in our training function to load the relevant data for each node in a distributed environment. Note that StreamingDataLoader is required if you need mid-epoch resumption. If that’s not needed, using the native Torch DataLoader is fine as well!

```python
from streaming import StreamingDataset, StreamingDataLoader

def get_dataloader_with_mosaic(path, batch_size, label):
    print(f"Getting {label} data from UC Volumes")
    # `local` points at the MDS directory written during preprocessing
    dataset = StreamingDataset(local=path, shuffle=True, batch_size=batch_size)
    return StreamingDataLoader(dataset, batch_size=batch_size)

train_dataloader = get_dataloader_with_mosaic(input_dir_train, args.batch_size, "train")
val_dataloader = get_dataloader_with_mosaic(input_dir_validation, args.batch_size, "val")
test_dataloader = get_dataloader_with_mosaic(input_dir_test, args.batch_size, "test")
```

Parallelizing Model Training with TorchRec and the TorchDistributor

Recommender systems that need to scale to millions of users or items can become overwhelming for a single node to handle. As a result, scaling to multiple nodes often becomes necessary for training these large deep recommendation models. To address this challenge, the reference solutions leverage a combination of PyTorch’s TorchRec library and PySpark’s TorchDistributor to efficiently scale recommendation model training on Databricks.

TorchRec is a domain-specific library built on PyTorch, aimed at providing the necessary sparsity and parallelism primitives for large-scale recommender systems. A key feature of TorchRec is its ability to efficiently shard large embedding tables across multiple GPUs or nodes using the DistributedModelParallel and EmbeddingShardingPlanner APIs. Notably, TorchRec has been instrumental in powering some of the largest models at Meta, including a 1.25 trillion parameter model and a 3 trillion parameter model.
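As a conceptual illustration of what sharding an embedding table means, the sketch below splits a table’s rows into contiguous blocks, one per worker, and maps a global row index to the worker that owns it. It does not call TorchRec and is not how TorchRec’s planner decides placements; `row_wise_shards` and `owner_of` are hypothetical helpers introduced only for this example.

```python
from typing import List, Tuple


def row_wise_shards(num_rows: int, world_size: int) -> List[range]:
    """Split row indices into contiguous, near-equal blocks, one per rank."""
    base, extra = divmod(num_rows, world_size)
    shards, start = [], 0
    for rank in range(world_size):
        size = base + (1 if rank < extra else 0)  # first `extra` ranks get one more row
        shards.append(range(start, start + size))
        start += size
    return shards


def owner_of(row: int, shards: List[range]) -> Tuple[int, int]:
    """Return (rank, local_row) for a global embedding row index."""
    for rank, rows in enumerate(shards):
        if row in rows:
            return rank, row - rows.start
    raise IndexError(f"row {row} is outside the table")


shards = row_wise_shards(num_rows=10, world_size=4)
rank, local_row = owner_of(7, shards)
```

With each worker holding only its block, a table too large for one GPU’s memory can still be trained, at the cost of communication when a lookup hits a row owned by another worker.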

Complementing TorchRec, TorchDistributor is an open source module integrated into PySpark that facilitates distributed training with PyTorch on Databricks. It is designed to support all distributed training paradigms offered by PyTorch, such as Distributed Data Parallel and Tensor Parallel, in various configurations, including single-node multi-GPU and multi-node multi-GPU setups. Additionally, it provides a minimal API that allows users to run training functions defined within the current notebook or in external training files. An example usage of the TorchDistributor is as follows:

```python
import os

import torch
import torch.distributed as dist
from pyspark.ml.torch.distributor import TorchDistributor

def main():
    # basic setup of relevant variables
    local_rank = int(os.environ["LOCAL_RANK"])
    global_rank = int(os.environ["RANK"])
    device = torch.device(f"cuda:{local_rank}")
    torch.cuda.set_device(device)

    # initializing process group
    dist.init_process_group(backend="nccl")

    # TRAINING LOOP GOES HERE, using `device` as the GPU for this process
    output = None  # placeholder: set to whatever the training loop should return

    # cleaning up process group
    dist.destroy_process_group()

    # optional output to return
    return output

# this arrangement uses 8 GPUs in your Databricks cluster for distributed training
output = TorchDistributor(num_processes=8, use_gpu=True, local_mode=False).run(main)
```

The combination of TorchRec and the TorchDistributor enables the efficient handling of large datasets and complex models typical in enterprise-grade recommendation systems.

Logging with MLflow

In the reference solutions provided, we use MLflow to log key items, such as model hyperparameters, metrics, and the model’s state_dict. Note that while the approach taken in the example notebooks collects the distributed model onto one node before saving to MLflow, this wouldn’t work for models that are too big to fit on one node. To address this issue, the next article in this series will go into detail on how to do distributed model checkpointing and large-scale model inference on Databricks.

Next Steps

In this article, we introduced reference solutions for implementing and training highly scalable deep recommendation models on Databricks. We briefly discussed the Two Tower architecture, the DLRM architecture, and where they fit within the broader recommender system pipeline. Finally, we delved into the specifics of distributed data loading and distributed model training of these recommendation models on Databricks. This is just the start: in future articles in this series, we will discuss additional aspects of productionizing recommender systems, including distributed model saving, inference, and integration with other tools on Databricks.